When examining some data points within the security industry, three insights can be derived from the statistics. The first is related to the shortage of cybersecurity skills, an ongoing challenge that has persisted for years within the cybersecurity sector. Organizations realize how this shortage impacts their overall strategy. So, organizations have taken many initiatives to address the existing shortage of skills. The second insight concerns security team burnout, a significant issue within the field. Security teams frequently experience burnout, and there are several reasons behind this. One factor is the comparatively small size of the team, which is concerned with the substantial and evolving threats. Also, addressing the specific needs of the team is crucial in tackling the issue of burnout. The third insight relates to dealing with data dispersed across various sources and the need to correlate this disparate data. This involves integrating data from sources such as EDR systems and analyzing it to conduct incident investigations or derive meaningful insights. The main challenges in this process include the lack of contextual information when correlating data from different sources, the siloed approach, and the decluttered data.

Considering this perspective, let's examine the past, present, and future approaches to cybersecurity. In the past, the main strategy resembled a castle-and-moat model, emphasizing fortified perimeters and defenses. In the present, organizations are transitioning to an anticipation stage in cybersecurity. Here, the focus shifts from whether a cyberattack will occur to when it will happen and how to minimize the risk of such incidents within the organization. The future involves leveraging AI-powered technologies to handle AI-driven threats while providing organizations with advanced AI tools capable of addressing existing challenges.

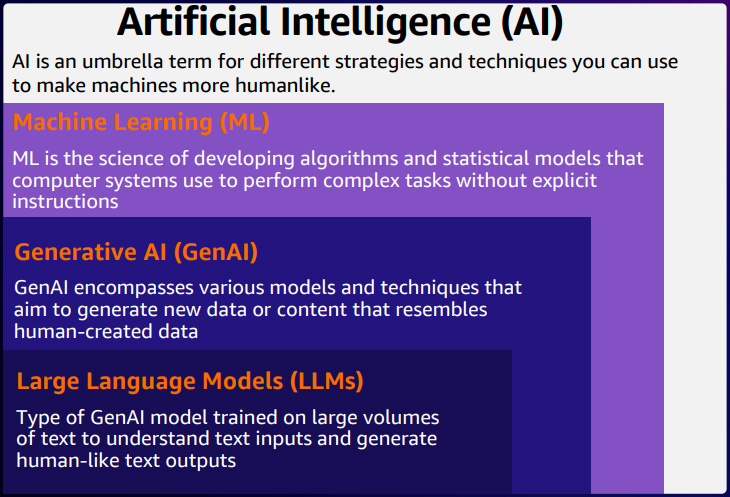

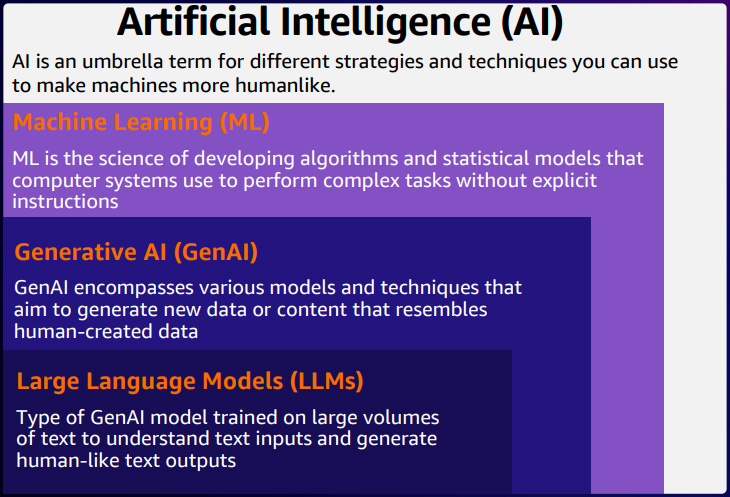

What is Artificial Intelligence?

Simply put, AI refers to machines that simulate the human mind through techniques, tools, or rules. Machine learning involves using algorithms to produce outputs without explicit instructions. Generative AI, on the other hand, generates new data that closely resembles human-created data, such as text, images, or music. Language learning models specifically focus on generating text; when prompted with a question, the machine responds with text. The key distinction among these concepts lies in determining which form of AI can effectively strengthen cybersecurity measures.

Challenges of AI

An AI system must be trustworthy, consisting of characteristics such as safety, reliability, validity, accountability, transparency, freedom from bias, and interpretability. This definition derives from the NIST Risk Management Framework, indicating that all these attributes should ideally be present in an AI system. However, achieving all these characteristics is challenging in reality. In AI development, deployment, and use, trade-offs between these characteristics often arise. Therefore, meeting all criteria becomes impractical. You may have faced challenges, such as biased data or inaccurate outputs due to the failure of that specific AI system to meet all the required characteristics.

Another challenge is regulatory uncertainty. As AI continues to evolve rapidly, new developments emerge every day. Governments worldwide are working on regulations to govern AI, such as the EU AI Regulatory Act. While these frameworks and guidelines provide some direction on the appropriate use of AI, uncertainty persists due to the dynamic nature of AI technology. In Canada and several other countries, a horizontal approach is being adopted. This involves categorizing AI systems as high-risk or low-risk and then designing corresponding obligations to meet regulatory AI requirements. The US and UK are taking a vertical approach. Here, the focus is on assessing how AI systems adapt to specific industries or applications before categorizing them as high-risk or low-risk. Similarly, Singapore's AI framework also classifies AI systems as high-risk or low-risk based on their use and application.

When considering resources essential for AI implementation, it's crucial to focus on three main components: data, compute resources, and human expertise. A diverse array of high-quality datasets is needed to function in AI systems. Secondly, compute resources contain the infrastructure required for successful AI operation and desired outcomes. This infrastructure investment often costs a significant amount due to the extensive resources. Thirdly, human expertise is essential. Skilled individuals skilled in machine learning, deep learning, and algorithm development help to advance AI initiatives. When these three aspects come together, they form the foundation for realizing an AI system and its effective utilization.

Accuracy in AI refers to producing reliable and precise outputs when asked a question. AI systems, categorized into supervised learning, unsupervised learning, and others, are evaluated based on how well their outputs align with the queries. While many may have interacted with popular AI models like GPT, it's important to note that their outputs aren't always accurate. For instance, when asking a question about a fact, a GPT model might provide incorrect answers. Providing feedback to these models helps them learn from their mistakes and improve their accuracy. However, a significant challenge lies in ensuring the accuracy and freedom of bias in the datasets on which these AI systems are trained. It's essential to verify whether the data accurately represents the intended domain, addresses the right questions, and delivers correct answers.

Use of AI/ML in cybersecurity events

Prompt injection attacks

Prompt injection attacks involve manipulating AI systems to generate unexpected behaviors. These attacks include manipulating inputs to drive the AI system into producing wrong outputs. Adversaries with malicious intent manipulate data inputs, causing the AI system to generate incorrect outputs.

AI-powered business email access

AI-powered business email campaigns have a significant risk for malicious use, particularly in the context of phishing emails. In the past, common indicators of phishing emails included spelling, grammatical errors, and other signs. However, adversaries now leverage GPTs to write perfect emails without such indicators. One might question why adversaries didn't write perfect phishing emails before, but the reality is that with GPTs, writing such emails takes mere seconds. Moreover, these AI models can adapt to the specific context of the recipient, making them even more convincing. Previously, phishing emails might have been easily identifiable because they were unrelated to the recipient's workplace, but with GPTs, adversaries can tailor emails to fit into the recipient's context.

AI-created malware

AI-created malware poses a significant threat to organizations. Examples include BlackMamba, a proof of concept malware, and Predator AI, an AI-powered tool targeting cloud applications. These instances underscore the growing risk AI-powered malware poses in compromising organizational security.

Deepfakes

Another concerning issue is deep fakes, which have become increasingly prevalent over the years. These manipulations involve morphing celebrities' faces to convey fabricated messages, often impacting political discourse or geopolitical matters. With deep fakes being consistently used, their prevalence is expected to rise in the future.

Data poisoning

Data poisoning involves contaminating datasets to produce malicious outputs. This issue arises when a large dataset is poisoned with incorrect or inappropriate data. Therefore, the AI system generates outputs irrelevant to the intended purpose or business processes. This initiates a chain reaction of damaging consequences.

How is AI used in the cloud?

Build better - Cloud providers integrate AI components to develop and host new AI services. A notable example is AWS SageMaker, which assists developers in building and training models.

Secure more - There's a significant focus on developing new AI security tools and technologies supporting defenses across cloud environments. AI plays a crucial role in various applications, including threat detection, anomaly detection, and analyzing usage patterns within the cloud. These tools help organizations identify irregular resource usage that deviates from their typical patterns.

Analyze everything - Utilizing sentiment analysis and data analysis extends beyond the cybersecurity perspective. These techniques provide valuable business insights by identifying patterns and facilitating decision-making across various business domains.

Improve experience - This refers to AI assistance provided to end-users, which enhances their experiences through features like chatbots. Organizations also leverage insights obtained from end-user interactions with AI assistance to refine business processes and improve decision-making capabilities.

How does AI affect the cloud?

A typical ransomware incident unfolds in several stages. Initially, threat actors gain entry into an organization, followed by lateral movement within the network. The next step involves either encrypting data or exfiltrating it. While this is a typical progression, the specific approach may vary. Attackers might exploit vulnerabilities in the cloud, utilize social engineering tactics, or capitalize on unpatched vulnerabilities within the organization. Once ransomware infiltrates the organization, it rapidly spreads throughout the cloud environment, targeting data stored in cloud storage. Attackers may leverage APIs to propagate the attack further, encrypting and exfiltrating data as they progress.

The potential of AI in enhancing cybersecurity - Understanding human and machine capabilities

Machines can process massive amounts of data and derive valuable insights. Humans can then leverage these insights to strategize and optimize various processes. By collaborating with AI tools or systems, individuals can identify areas within their workflows where incorporating AI can enhance productivity and reduce inefficiencies. This partnership between human expertise and machine capabilities drives effective decision-making and process improvement initiatives.

Reference:

AWS Events