What is Real-Time Analytics in Microsoft Fabric?

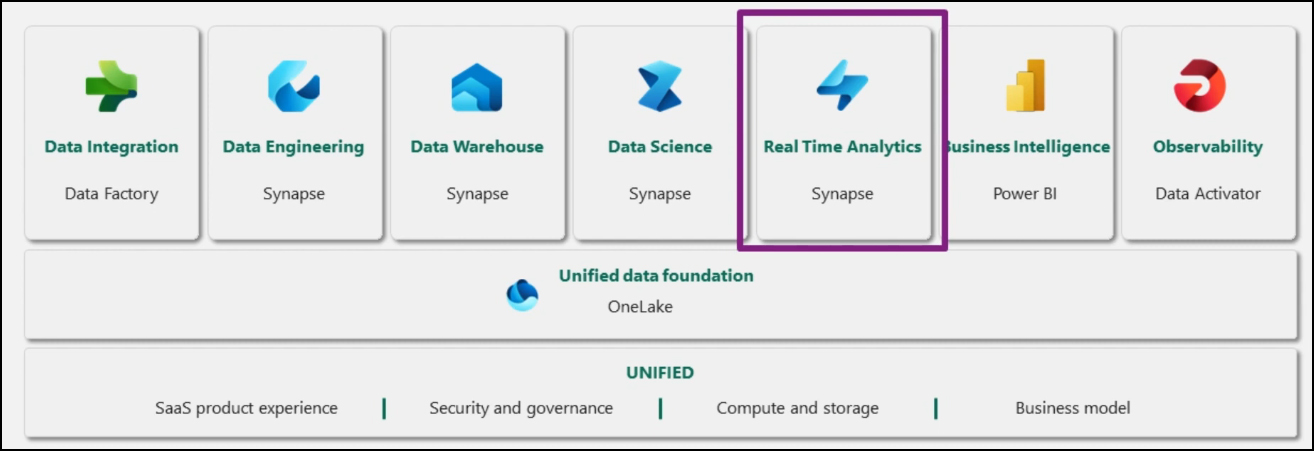

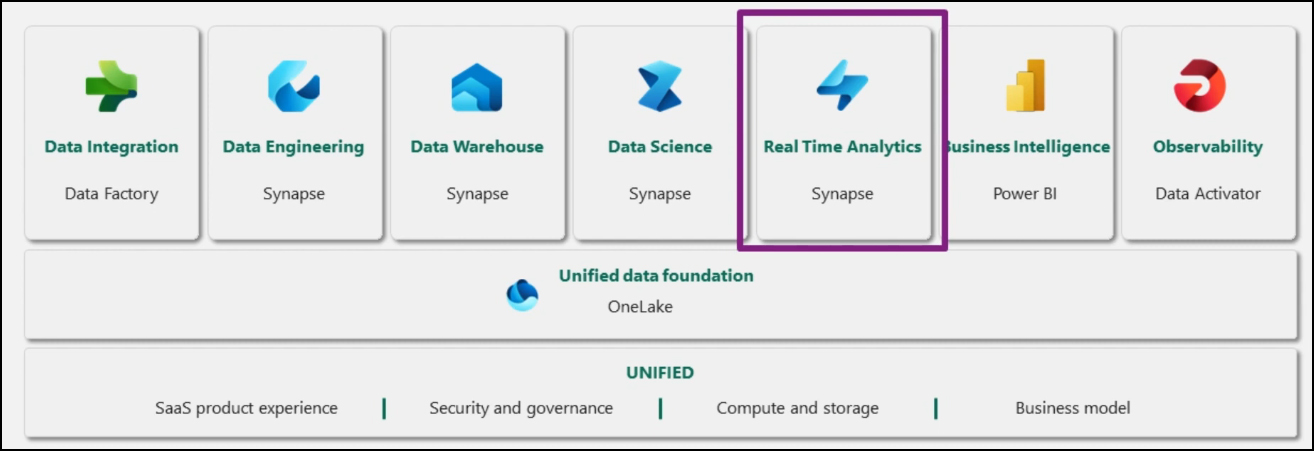

Microsoft Fabric integrates Data Factory, Synapse Analytics, Data Explorer, and Power BI into a unified cloud platform. Its open, governed data lakehouse foundation is cost-effective and performance-optimized, and it supports business intelligence, machine learning, and AI workloads at any scale. Fabric serves as the core platform for migrating and modernizing existing analytics solutions, whether they run on data appliances or traditional data warehouses. Because Fabric is delivered as SaaS, a comprehensive end-to-end analytics solution can be deployed quickly, and built-in security and governance features help protect your data and insights.

Synapse Real-Time Analytics is a key component of Microsoft Fabric: a fully managed big data analytics platform optimized for streaming and time-series data. It combines a powerful query language with an engine designed for high performance, so you can query structured, semi-structured, and unstructured data. Real-time analytics is fully integrated with the rest of the Microsoft Fabric suite and supports data loading, transformation, and advanced visualization. Unlike traditional data lakehouse or data warehouse analytics, which typically work on data loaded in batches, real-time analytics ingests streaming data as it arrives. Several ingestion routes are available: Azure Event Hubs, Azure IoT Hub, pipelines, dataflows, notebooks, or open-source solutions such as Kafka, Logstash, and many more.

Once streaming data is ingested into Microsoft Fabric, it can be stored in a KQL database (KQL DB) and mirrored into the data lakehouse. After storage, machine learning models can be trained and tested directly on the data lakehouse. As with other scenarios, business users can analyze and visualize the data through Power BI, using See-Through mode or SQL endpoints. The data can also be accessed and processed using KQL (Kusto Query Language) or notebooks powered by Spark.
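To make the KQL access path concrete, here is a minimal sketch in Python. It assumes a hypothetical table named `Telemetry` with `Timestamp` and `DeviceId` columns, and it uses the `azure-kusto-data` package with Azure CLI sign-in to run the query. The query URI and database name are placeholders you would replace with your own.

```python
def build_recent_events_query(table: str, minutes: int) -> str:
    """Return a KQL query that counts recent events per device.

    The column names Timestamp and DeviceId are hypothetical.
    """
    return (
        f"{table}\n"
        f"| where Timestamp > ago({minutes}m)\n"
        f"| summarize Events = count() by DeviceId\n"
        f"| order by Events desc"
    )


def run_query(query_uri: str, database: str, query: str) -> list:
    """Run a KQL query and return rows as dictionaries.

    Requires `pip install azure-kusto-data` and a prior `az login`.
    """
    # Imported lazily so the query builder works without the SDK installed.
    from azure.kusto.data import KustoClient, KustoConnectionStringBuilder

    kcsb = KustoConnectionStringBuilder.with_az_cli_authentication(query_uri)
    client = KustoClient(kcsb)
    response = client.execute(database, query)
    return [row.to_dict() for row in response.primary_results[0]]


# Print the generated query; calling run_query needs a real KQL DB.
print(build_recent_events_query("Telemetry", 15))
```

The same query text can be pasted into a KQL queryset in Fabric; the Python wrapper is only one of several ways to submit it.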

Data ingestion is the process of loading data from one or more sources into real-time analytics within Microsoft Fabric. Once the data is ingested, it becomes available for querying. Kusto supports two modes of data ingestion: batching and streaming.

Batching ingestion

Batching ingestion groups incoming data into batches and is optimized for high ingestion throughput. It is the preferred and most efficient type of ingestion. Data is batched according to specific ingestion properties, and the resulting small batches are then merged and optimized for fast query performance.
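The idea of sealing a batch when a limit is reached can be sketched in a few lines of Python. This is a conceptual illustration only, not Kusto's implementation: the `Batcher` class and its `max_items` and `max_age_seconds` limits are hypothetical names, loosely modeled on the item-count and time limits of an ingestion batching policy.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Batcher:
    """Seal a batch when it reaches max_items or max_age_seconds."""

    max_items: int
    max_age_seconds: float
    sealed: list = field(default_factory=list)
    _items: list = field(default_factory=list)
    _opened_at: Optional[float] = None

    def add(self, item, now: float) -> None:
        # This sketch has no timer: an aged batch is sealed when the
        # next item arrives, before that item is accepted.
        if self._items and now - self._opened_at >= self.max_age_seconds:
            self.flush()
        if not self._items:
            self._opened_at = now
        self._items.append(item)
        if len(self._items) >= self.max_items:
            self.flush()

    def flush(self) -> None:
        """Seal whatever is currently buffered."""
        if self._items:
            self.sealed.append(self._items)
            self._items = []


batcher = Batcher(max_items=3, max_age_seconds=10.0)
for value, timestamp in enumerate([0, 1, 2, 3, 20]):
    batcher.add(value, now=timestamp)
batcher.flush()
print(batcher.sealed)
```

Larger limits favor throughput because fewer, bigger batches are created; smaller limits reduce the delay before data becomes queryable.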

Streaming ingestion

Streaming ingestion involves continuously ingesting data from streaming sources, enabling near real-time latency for small data sets per table. The data is initially ingested into the row store and later moved to column store extents for optimized performance. Streaming ingestion can be achieved using the Kusto client library or one of the supported data pipelines.
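The difference between the row store and the column store can be illustrated with a small Python sketch. It is a conceptual model under simplifying assumptions, not the engine's actual storage format: `rows_to_columns` is a hypothetical helper that pivots buffered rows into one list per column, which is the layout that makes scans over a single column cheap.

```python
def rows_to_columns(rows: list) -> dict:
    """Pivot a list of row dictionaries into a dictionary of column lists.

    Missing values are filled with None so all columns stay aligned.
    """
    names = []
    for row in rows:
        for name in row:
            if name not in names:
                names.append(name)
    return {name: [row.get(name) for row in rows] for name in names}


# Rows arrive one at a time and are appended to a row-oriented buffer.
row_store = [
    {"device": "a", "temp": 21.5},
    {"device": "b", "temp": 19.0},
    {"device": "a", "temp": 22.1, "alert": True},
]

# Later, the buffered rows are rewritten in a column-oriented layout.
column_extent = rows_to_columns(row_store)
print(column_extent["temp"])
```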

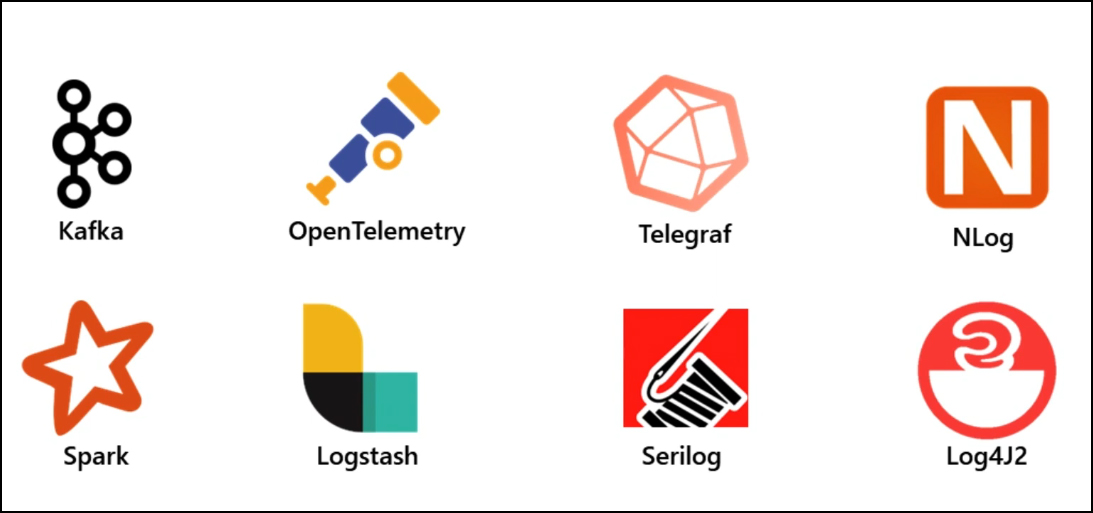

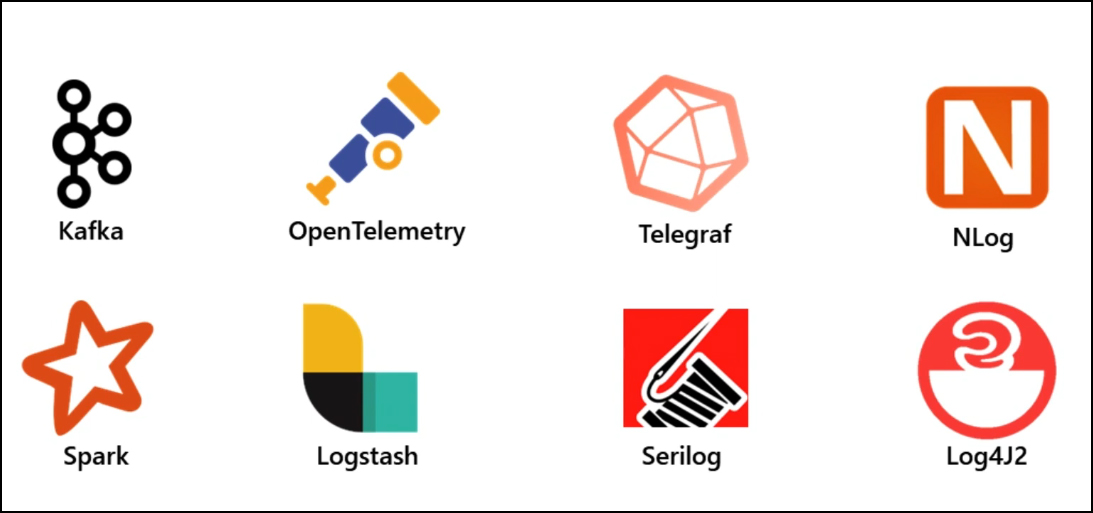

Kusto, the core engine of real-time analytics in Microsoft Fabric, offers various data ingestion connectors. These open-source connectors integrate seamlessly with real-time analytics in Microsoft Fabric, ensuring efficient and flexible data ingestion.

Open-source Connectors

Kusto supports several open-source platforms for data ingestion, including the following:

Apache Kafka

Kusto supports data ingestion from Apache Kafka, a distributed streaming platform designed for building real-time data pipelines that reliably transfer data between systems and applications.

Apache Spark

Kusto integrates with Apache Spark, a unified analytics engine optimized for large-scale data processing.

OpenTelemetry

OpenTelemetry (OTel) is an open-source observability framework consisting of a suite of tools, APIs, and SDKs. It allows IT teams to instrument applications and generate, collect, and export telemetry data, enabling detailed analysis and a deeper understanding of software performance and behavior.

Logstash

Logstash, the L in the ELK stack, aggregates data from various sources, processes it, and sends it through the pipeline, typically for indexing in Elasticsearch. However, in the context of Microsoft Fabric, Logstash integrates with real-time analytics instead.

Telegraf

Telegraf is a server-based agent that collects and transmits metrics and events from databases, systems, and IoT sensors. Written in Go, Telegraf compiles into a single binary with no external dependencies and requires minimal memory, making it lightweight and efficient.

Serilog

Serilog is a widely used logging framework for .NET applications. It allows developers to fine-tune their control over which log statements are output based on factors such as the logger's name, log level, and message pattern.

NLog

NLog is a flexible, open-source logging framework for various .NET applications, including .NET Standard. It enables developers to write log data to multiple targets, such as databases, files, or the console.

Log4J2

Apache Log4J2 is a Java-based logging framework maintained by the Apache Logging Services project of the Apache Software Foundation.

A detailed examination of these connectors follows.

Apache Kafka Sink to Kusto

You can ingest data into Kusto from Kafka using the Apache Kafka Sink, built on Kafka Connect. Kafka Connect is a robust tool designed for scalable and reliable data streaming between Apache Kafka and other systems. The Kafka Sink acts as a connector for Kafka and requires no coding. Certified by Confluent, the Kafka Sink has undergone extensive review and testing to ensure quality, feature completeness, compliance with standards, and optimal performance. This is an ingestion-only connector supporting both batching and streaming modes. Common use cases include importing logs, telemetry, and messages from source systems such as servers, IoT devices, and databases for analytics. The connector is built in Java using the Kusto Java SDK.
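Because the sink runs on Kafka Connect, deploying it is a matter of configuration. The sketch below builds the JSON payload that Kafka Connect's REST API accepts on `POST /connectors`. The property names (`kusto.ingestion.url`, `kusto.query.url`, `kusto.tables.topics.mapping`) and the connector class are assumptions based on the connector's public documentation, so check them against the version you deploy. The URLs, topic, database, and table names are placeholders, and authentication settings are deliberately left out.

```python
import json


def kusto_sink_connector(name, topic, database, table,
                         ingest_url, query_url, streaming=False):
    """Build a Kafka Connect payload for the Kusto sink (assumed keys)."""
    mapping = [{
        "topic": topic,
        "db": database,
        "table": table,
        "format": "json",
        # Assumed switch between batching (False) and streaming (True).
        "streaming": streaming,
    }]
    return {
        "name": name,
        "config": {
            "connector.class":
                "com.microsoft.azure.kusto.kafka.connect.sink.KustoSinkConnector",
            "tasks.max": "1",
            "topics": topic,
            "kusto.ingestion.url": ingest_url,
            "kusto.query.url": query_url,
            # Kafka Connect config values are strings, so the mapping
            # is serialized to a JSON string.
            "kusto.tables.topics.mapping": json.dumps(mapping),
            # Authentication properties omitted; add them per the docs.
        },
    }


payload = kusto_sink_connector(
    name="kusto-sink",
    topic="telemetry",
    database="MyDatabase",
    table="Telemetry",
    ingest_url="https://ingest.example.invalid",
    query_url="https://query.example.invalid",
    streaming=True,
)
print(json.dumps(payload, indent=2))
```

The printed document can be submitted to a Kafka Connect worker with any HTTP client once the credentials are filled in.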

Apache Spark Connector for Kusto

The Spark Connector is an open-source project that can run on any Spark cluster. It implements both a data source and a data sink for moving data to and from Spark clusters, which makes your database a valid data store for standard Spark operations, including reading, writing, and live streaming. Integrating real-time analytics with Spark lets you build fast, scalable applications for data-driven scenarios such as machine learning, extract-transform-load (ETL), and log analytics. The connector can write to KQL DB in both batch and streaming modes.
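A batch write through the connector might look like the following PySpark-style sketch. It assumes the open-source connector is installed on the cluster and registered under the format name `com.microsoft.kusto.spark.datasource`, and that the option names `kustoCluster`, `kustoDatabase`, `kustoTable`, and `accessToken` match the connector version in use. The helper functions are hypothetical, and `df` is any Spark DataFrame you supply.

```python
# Format name of the open-source connector (assumed; a Fabric notebook
# may expose the connector under a different name).
KUSTO_FORMAT = "com.microsoft.kusto.spark.datasource"


def kusto_options(cluster: str, database: str, table: str,
                  access_token: str) -> dict:
    """Collect the connector options for one target table."""
    return {
        "kustoCluster": cluster,
        "kustoDatabase": database,
        "kustoTable": table,
        "accessToken": access_token,
    }


def write_batch(df, options: dict) -> None:
    """Append a Spark DataFrame to a KQL DB table in batch mode."""
    (df.write
       .format(KUSTO_FORMAT)
       .options(**options)
       .mode("append")
       .save())
```

In a notebook you would call `write_batch(df, kusto_options(...))` with your own cluster URI, database, table, and token.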

OpenTelemetry Exporter for Kusto

OpenTelemetry (OTel) is a framework for application observability hosted by the Cloud Native Computing Foundation (CNCF). It provides standard interfaces for collecting observability data, including metrics, logs, and traces. The OTel collector consists of three key components:

Receivers – Handle the process of receiving data into the collector.

Processors – Define what actions are taken with the received data.

Exporters – Responsible for sending the data to its destination.

The OpenTelemetry exporter for Kusto ingests data from the collector's receivers into KQL DB, in either batching or streaming mode. It serves as a bridge between OpenTelemetry and your database, and the exported data format can be customized to meet your specific needs. Its primary use cases are ingesting logs, metrics, and traces. A trace tracks the complete journey of a request or action as it moves through the nodes of a distributed system, which is especially useful in containerized applications and microservices architectures. The exporter is built on the Kusto Go SDK.
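The receiver, processor, and exporter roles can be mimicked with plain Python functions. This is a conceptual sketch of the pipeline pattern, not the collector's API: all function names are hypothetical, records are simple dictionaries, and the exporter here just appends to a list where a real one would send data to KQL DB.

```python
def drop_debug(record):
    """Processor: discard DEBUG records by returning None."""
    return None if record.get("severity") == "DEBUG" else record


def add_service_name(record):
    """Processor: enrich each record with a (hypothetical) service name."""
    return {**record, "service": "checkout"}


def run_pipeline(receiver, processors, exporter) -> int:
    """Pull records from the receiver, process them, export survivors."""
    exported = 0
    for record in receiver:
        for process in processors:
            record = process(record)
            if record is None:
                break  # a processor dropped the record
        else:
            exporter(record)
            exported += 1
    return exported


received = [
    {"severity": "DEBUG", "body": "cache miss"},
    {"severity": "ERROR", "body": "payment failed"},
]
destination = []
count = run_pipeline(received, [drop_debug, add_service_name],
                     destination.append)
print(count, destination)
```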

Logstash Output Plugin for Kusto

For organizations using the ELK Stack, migrating to a big data platform may seem lengthy and complex, but it does not have to be. Moving from ELK to real-time analytics can improve performance, lower costs, and yield better insights through advanced query capabilities, all without a complex migration. The Logstash Kusto plugin makes this possible by sending events from Logstash to KQL DB for later analysis. Logstash can ingest data from multiple sources simultaneously, transform it, and send it to your preferred destination, which in this case is real-time analytics. The plugin is built on the Kusto Java SDK and supports batching for efficient data processing.

Telegraf Output Plugin for Kusto

Telegraf is an open-source, lightweight agent with a minimal memory footprint, designed for collecting, processing, and writing telemetry data, including logs, metrics, and IoT data. It supports hundreds of input and output plugins and is widely used and well supported by the open-source community. The output plugin acts as a connector from Telegraf and ingests data from the various input plugins into your database. Typical scenarios include collecting telemetry from Docker containers, Kubernetes environments, Windows event logs, or any other supported input plugin, and ingesting the resulting logs, metrics, and IoT data into KQL DB to extract insights. The Telegraf plugin supports both batching and streaming and is built on the Kusto Go SDK.

Kusto Forwarders: Serilog, NLog, and Log4J2

In addition to connectors, Kusto supports forwarders or appenders, which capture information from a logger and write log messages to a file or other storage locations.

The Serilog sink, an appender, streams log data directly to your database, enabling real-time analysis and visualization of logs.

The NLog sink is a target for NLog that sends log messages directly to your KQL DB. It offers an efficient way to sink logs to your database.

The Apache Log4J2 sink allows you to stream log data from Java applications to your KQL DB, providing real-time capabilities for analyzing and visualizing logs.
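The appender pattern behind these sinks can be shown with Python's standard `logging` module. This is an analogy only and uses none of the Serilog, NLog, or Log4J2 APIs: `BatchingForwarder` is a hypothetical handler that buffers log records and hands them to a `send` callable in batches, where a real sink would ingest them into KQL DB.

```python
import logging


class BatchingForwarder(logging.Handler):
    """Buffer log records and forward them in batches."""

    def __init__(self, send, batch_size: int = 100):
        super().__init__()
        self._send = send            # callable that receives a list of dicts
        self._batch_size = batch_size
        self._buffer = []

    def emit(self, record: logging.LogRecord) -> None:
        self._buffer.append({
            "timestamp": record.created,
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        # Forward whatever is buffered, even if the batch is not full.
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._send(batch)


forwarded = []
log = logging.getLogger("orders")
log.setLevel(logging.INFO)
log.propagate = False
handler = BatchingForwarder(forwarded.append, batch_size=2)
log.addHandler(handler)

log.info("order %s created", 42)
log.warning("inventory low")
log.error("payment failed")
handler.flush()
print([len(batch) for batch in forwarded])
```

The application keeps calling its logger as usual; only the handler knows where the records end up, which is what makes this kind of forwarder easy to add to an existing codebase.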

These connectors and forwarders allow you to send high volumes and varieties of data with high velocity to your KQL DB for real-time analytics in Microsoft Fabric.

Reference:

Microsoft Events