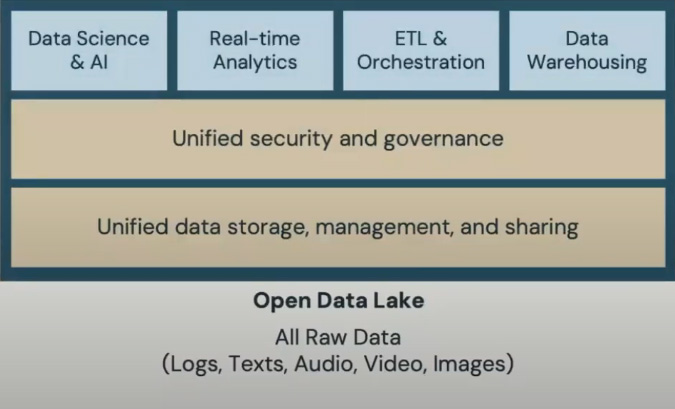

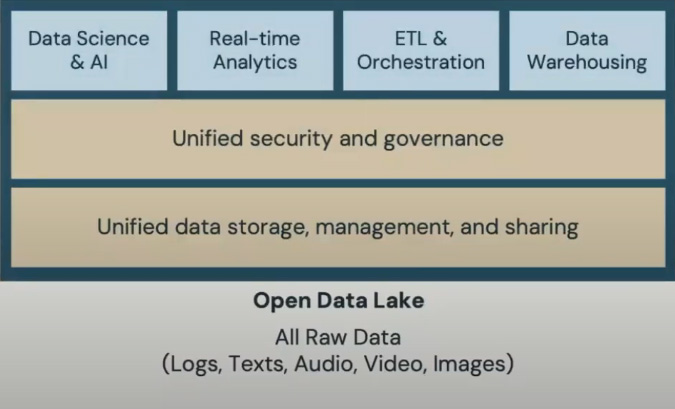

One thing is clear in today's data and AI landscape: the most successful organizations will be those that use data and AI effectively. Executing this strategy, however, has historically been challenging. Many enterprises today must integrate multiple systems: a data lake for unstructured data, an orchestration and ETL stack, one or more data warehouses supporting various BI systems, a dedicated stack for data science and machine learning, and yet another system for real-time analytics. Each of these components requires its own governance approach. This fragmentation creates several challenges. First, data silos significantly increase costs, both financially and operationally. Second, inconsistent governance undermines trust in the data, making it difficult to determine what data is available and which source should be used for a specific analytics workload. Finally, the use of multiple stacks, tools, and programming languages makes collaboration between teams such as data engineering, data analytics, and data science inefficient. These complexities drive up costs and make execution more difficult.

The Data Lakehouse

The Lakehouse architecture offers a more efficient solution. All data is first stored in the data lake, creating a unified foundation. A consistent approach is then applied to data storage, management, and sharing, ensuring uniformity across the system. On top of this, a single governance framework manages both structured and unstructured data. Finally, all data and AI workloads access this centralized source of truth through the same governance model, streamlining operations and improving data integrity.

Azure Databricks Lakehouse Platform

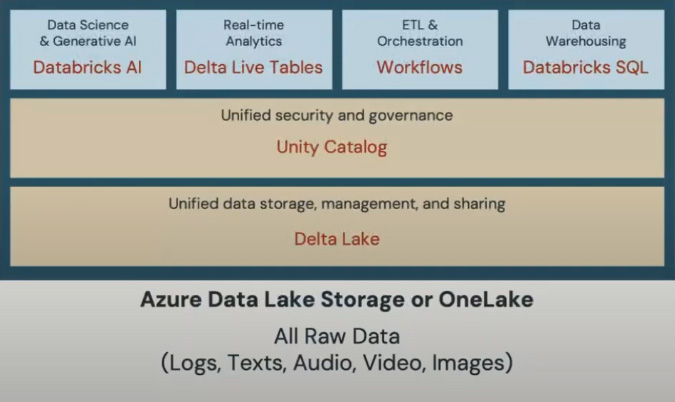

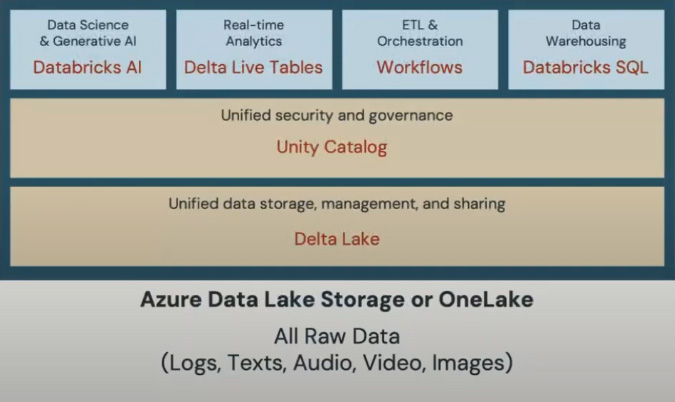

The Azure Databricks Lakehouse Platform, introduced in 2020, has become the standard approach for data and analytics across the Azure Cloud. Today, thousands of organizations, from large enterprises to startups, build their Lakehouses on Databricks. The benefits of this approach are well-documented. In collaboration with Nucleus Research, Databricks analyzed customer adoption using accounting principles to measure impact. The findings revealed an average ROI of over 480%, with payback periods for new workloads averaging just over four months. Customers also reported significant productivity gains across their operations.

Components of the Azure Databricks Lakehouse Platform

Delta Lake

Delta Lake is the key to providing unified data storage, management, and sharing on the platform. One of its biggest strengths is its ability to support all major Lakehouse formats available today. It generates metadata not only for Delta Lake itself but can also automatically create metadata for Apache Hudi and Apache Iceberg when interacting with systems built on these formats. This means that by building for Delta Lake, you are inherently building for a broad ecosystem. Delta Lake also includes Delta Sharing, a fully open standard for securely exchanging data. With Delta Sharing, the two sides of a data-sharing setup no longer need to be on the same platform. As long as both platforms recognize the Delta Lake format, they can share data without replication. Delta Lake is open-source and has a thriving community of over 500 contributors actively supporting and enhancing the project.
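To make the sharing model a little more concrete, here is a minimal sketch of how a recipient might address a shared table with the open-source `delta-sharing` Python client. The profile file name and the share, schema, and table names are hypothetical placeholders, and the `delta_sharing` package has to be installed separately. Only the small URL helper runs without it.

```python
def shared_table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Build the '<profile>#<share>.<schema>.<table>' address the client expects."""
    for part in (share, schema, table):
        if not part or "." in part or "#" in part:
            raise ValueError(f"invalid name component: {part!r}")
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path: str, share: str, schema: str, table: str):
    """Read a shared table into a pandas DataFrame."""
    import delta_sharing  # third-party; imported lazily so the helper above runs alone

    return delta_sharing.load_as_pandas(
        shared_table_url(profile_path, share, schema, table)
    )


# Hypothetical names, for illustration only.
url = shared_table_url("config.share", "retail_share", "sales", "orders")
print(url)
```

The profile file is the credential document a data provider hands to the recipient, so the recipient does not need an account on the provider's platform to read the table.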

Unity Catalog

Unity Catalog is the governance solution for Azure Databricks, offering a standardized approach to managing both structured and unstructured data. It extends governance beyond tables and files to models, dashboards, and notebooks, creating a unified governance framework across the platform. A key feature of Unity Catalog is natural language search and data discovery, powered by LakehouseIQ, an embedded large language model. This enables users to locate and understand their data using conversational queries. In addition, Lakehouse Monitoring provides AI-driven monitoring capabilities, automatically generating metrics, dashboards, and alerts to track workload performance. This helps organizations assess both technical and business impact in real time. For example, it can detect unexpected transmission of personally identifiable information (PII) and trigger alerts to ensure compliance and security.
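As an illustration of what a single governance model looks like in practice, the sketch below assembles the SQL statements that would typically give a group read access to one table. It assumes Unity Catalog's three-level `catalog.schema.table` naming and its `USE CATALOG`, `USE SCHEMA`, and `SELECT` privileges. The catalog, schema, table, and group names are hypothetical, and the code only builds strings; running them would require a Databricks SQL session.

```python
def qualified_name(catalog: str, schema: str, table: str) -> str:
    """Return a backtick-quoted three-level name: catalog.schema.table."""
    return ".".join(f"`{part}`" for part in (catalog, schema, table))


def grant_select(catalog: str, schema: str, table: str, principal: str) -> list[str]:
    """Statements a principal typically needs in order to read one table."""
    return [
        f"GRANT USE CATALOG ON CATALOG `{catalog}` TO `{principal}`",
        f"GRANT USE SCHEMA ON SCHEMA `{catalog}`.`{schema}` TO `{principal}`",
        f"GRANT SELECT ON TABLE {qualified_name(catalog, schema, table)} TO `{principal}`",
    ]


# Hypothetical names, for illustration only.
statements = grant_select("main", "sales", "orders", "analysts")
for statement in statements:
    print(statement)
```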

Databricks SQL

Databricks SQL delivers a comprehensive data warehousing solution directly on your Lakehouse. It is fully serverless, eliminating the complexities of infrastructure management and allowing for seamless scalability. Powered by AI-driven query optimization, Databricks SQL learns from past query patterns to continuously refine execution plans. This results in improved performance and efficiency over time, ensuring faster and more cost-effective queries. Built on ANSI SQL and leveraging open APIs, Databricks SQL provides full compatibility with industry standards. This ensures that organizations can build a flexible, future-proof data warehouse without being locked into proprietary technologies.
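One practical consequence of building on open APIs is that client code can stay generic. The sketch below assumes the `databricks-sql-connector` package, which follows Python's DB-API 2.0 (PEP 249), so the same query helper works against any compliant driver. The hostname, HTTP path, and token are placeholders you would supply, and a local SQLite database stands in for a warehouse so the example runs anywhere.

```python
import sqlite3


def run_query(connection, query: str) -> list:
    """Run one query over any DB-API 2.0 (PEP 249) connection and return all rows."""
    cursor = connection.cursor()
    try:
        cursor.execute(query)
        return cursor.fetchall()
    finally:
        cursor.close()


def connect_to_warehouse(hostname: str, http_path: str, token: str):
    """Open a connection to a SQL warehouse (requires databricks-sql-connector)."""
    from databricks import sql  # third-party; imported lazily

    return sql.connect(
        server_hostname=hostname, http_path=http_path, access_token=token
    )


# Local stand-in: SQLite also implements DB-API 2.0.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
demo.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 32.5)])
rows = run_query(demo, "SELECT COUNT(*), SUM(amount) FROM orders")
print(rows)
```

Swapping `demo` for the result of `connect_to_warehouse(...)` would send the same query to a Databricks SQL warehouse, assuming the table exists there.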

Databricks Workflows

Databricks Workflows provides a single orchestration solution for managing tasks across any Lakehouse scenario. It supports both scheduled workflows for batch data ingestion at preset intervals and real-time data pipelines that run continuously. Powered by AI-driven automation, Databricks Workflows optimizes job execution, remediation, and auto-scaling, ensuring high reliability. A comprehensive workflow monitoring dashboard provides full visibility into every run, while automated failure notifications help teams stay proactive. Alerts can be sent via email, Slack, PagerDuty, or any custom webhook, allowing teams to troubleshoot and resolve issues before they impact data consumers.
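A scheduled workflow with failure alerts can be described as a small JSON document. The sketch below builds one in Python, using field names from the Databricks Jobs API 2.1 as I understand them (`tasks`, `depends_on`, `schedule`, `email_notifications`). The job name, notebook paths, and email address are hypothetical, and compute settings are omitted for brevity, so treat this as an outline rather than a payload ready to submit.

```python
import json


def nightly_job_spec(ingest_path: str, transform_path: str, alert_email: str) -> dict:
    """Describe a two-task job that runs daily at 02:00 UTC and emails on failure."""
    return {
        "name": "nightly-ingest",  # hypothetical job name
        "tasks": [
            {
                "task_key": "ingest",
                "notebook_task": {"notebook_path": ingest_path},
            },
            {
                "task_key": "transform",
                # Runs only after the ingest task succeeds.
                "depends_on": [{"task_key": "ingest"}],
                "notebook_task": {"notebook_path": transform_path},
            },
        ],
        "schedule": {
            # Quartz cron: second, minute, hour, day-of-month, month, day-of-week.
            "quartz_cron_expression": "0 0 2 * * ?",
            "timezone_id": "UTC",
        },
        "email_notifications": {"on_failure": [alert_email]},
    }


spec = nightly_job_spec("/Jobs/ingest", "/Jobs/transform", "data-team@example.com")
print(json.dumps(spec, indent=2))
```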

Delta Live Tables

Delta Live Tables streamlines real-time analytics with a declarative approach using SQL or Python, reducing the complexity of data engineering. As you build and deploy streaming data pipelines, Databricks automates critical production tasks: auto-scaling infrastructure to meet workload demands, orchestrating pipeline dependencies, handling errors and recovery to maintain reliability, and optimizing performance for each workload. Delta Live Tables also includes automatic data quality testing and exception management, minimizing operational overhead so teams can focus on extracting insights rather than maintaining infrastructure.
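Delta Live Tables code runs only inside a Databricks pipeline, so the sketch below is a plain-Python model of the declarative data quality idea rather than the DLT API itself. Rules are declared by name, rows that violate any rule are dropped, and violations are counted per rule. In an actual pipeline a comparable rule would typically be declared with a decorator such as `@dlt.expect_or_drop`. The rule names and sample rows here are made up for illustration.

```python
from typing import Callable, Iterable

Row = dict
Rule = Callable[[Row], bool]


def apply_expectations(rows: Iterable[Row], rules: dict[str, Rule]):
    """Keep rows that pass every rule and count failures per rule name."""
    kept = []
    failures = {name: 0 for name in rules}
    for row in rows:
        passed = True
        for name, rule in rules.items():
            if not rule(row):
                failures[name] += 1
                passed = False
        if passed:
            kept.append(row)
    return kept, failures


# Declarative rules: a name plus a condition, with no pipeline logic mixed in.
rules = {
    "valid_id": lambda r: r.get("id") is not None,
    "positive_amount": lambda r: r.get("amount", 0) > 0,
}
rows = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2.0},
]
clean, failed = apply_expectations(rows, rules)
print(clean, failed)
```

Keeping the rules separate from the processing loop is what lets a managed service report quality metrics and handle exceptions without extra code from the pipeline author.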

Databricks AI

Databricks AI enables organizations to maintain full control and privacy over their models and data while simplifying AI deployment. It provides a unified workflow that streamlines the entire model-building process. It is also designed for cost efficiency, so organizations can scale AI initiatives without unnecessary expense.

Challenges organizations face with Generative AI

Generative AI introduces several challenges that organizations must address:

Data and Model Control: Many AI services require sending data outside an organization's environment, increasing the risk of data leaks. There is also uncertainty around IP ownership, particularly regarding model weights generated by third-party services.

Operational Complexity: Building generative models means scaling complex operations, executing them efficiently, and monitoring model quality and accuracy in production, all of which have become increasingly difficult.

High Training Costs: Training LLMs demands massive computational resources, and the training process is hard to optimize. This makes LLM development expensive for many organizations, limiting their ability to build and customize models in-house.

Advantages of Azure Databricks

Complete control

Azure Databricks provides complete ownership over models and data, ensuring privacy, compliance, and protection of intellectual property. Organizations can leverage their enterprise data to build generative AI solutions that best fit their needs. Whether by augmenting an existing open-source model, enhancing a SaaS-based AI model through RAG, or developing a fully custom model, Databricks enables full flexibility without compromising control. Since all AI development takes place within an organization's environment, data never has to leave. This approach ensures compliance with security standards while giving businesses full control over their model outputs and intellectual property.

Unified workflow

Another key advantage of Azure Databricks is its unified workflow, which reduces operational complexity. Databricks manages every stage of AI development, from data ingestion and featurization to model building, tuning, and deployment into production, all within a single platform. With built-in data management and governance capabilities, organizations can ensure that generative AI development aligns with their broader data strategy. The platform also provides end-to-end lineage and auditability, making it easier to track data from its source to production. This level of transparency simplifies model explainability, helping organizations understand how models are trained and operated.

Cost-effective

Azure Databricks provides significant cost advantages for AI workloads through an optimized software stack designed specifically for training large models. Through increased compute utilization, automated recovery, intelligent memory management, and efficient streaming data handling, Databricks AI maximizes performance while minimizing resource consumption. This optimization has led to cost reductions of up to 10x for generative AI use cases. A notable example is the training of Stable Diffusion, a well-known text-to-image model. Stability AI, the company behind Stable Diffusion, originally reported that training the model cost approximately $600,000. Using Databricks AI, the same model was trained for less than $50,000 in under seven days, demonstrating the platform's ability to cut costs while significantly accelerating AI development.

Conclusion

The Azure Databricks Lakehouse Platform delivers three major advantages for organizations looking to streamline their data and AI operations. First, it unifies structured and unstructured data, which simplifies data management, reduces total cost of ownership (TCO), and accelerates time to value. This eliminates the complexity of managing separate storage and analytics systems. Second, it standardizes governance across all data and analytics workloads, providing complete visibility into data lineage, access, and usage. Organizations can better understand where their data originates, how it moves across the business, and who uses it, which supports compliance and security. Finally, the platform centralizes all data workloads on a single source of truth, supporting data warehousing, ETL and orchestration, real-time analytics, and AI workloads, including generative AI. By consolidating these functions, Azure Databricks enhances efficiency, improves collaboration, and accelerates innovation across the entire data ecosystem.

Reference:

Microsoft Events